Reinforcement Learning in Robotics: A Survey

Author: Jens Kober

Created: Jan 01, 2020 8:54 AM

Status: Reading

Tags: Reinforcement Learning

Type: Paper

Introduction

Brief summary

RL gives two things?

A framework and a set of tools for the design of sophisticated and hard-to-engineer behaviors.

Paper’s focus?

Model-based and model-free methods; value-function-based and policy search methods.

How does it work, in detail?

The agent autonomously discovers optimal behavior through trial-and-error interaction with its environment. Instead of spelling out the solution, the designer provides feedback in terms of a scalar objective function (the reward) that measures one-step performance.

What is called the “policy”?

A function π that generates the motor commands based on the current situation (state or observation).

RL’s aim?

To find a policy that optimizes the long-term sum of rewards; an RL algorithm is a method for finding such a (near-)optimal policy.
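A minimal sketch of this trial-and-error loop, assuming a hypothetical one-dimensional "reach the goal" task that is not taken from the survey. The policy maps the state to a motor command, the environment answers with a scalar one-step reward, and the return is the sum of those rewards. The names `step`, `always_right`, `random_policy`, and `run_episode` are illustrative only.

```python
import random

GOAL = 4  # hypothetical task: move along cells 0..4 and reach cell 4


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Hypothetical environment: next state, scalar one-step reward, done flag."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else -0.1  # measures one-step performance
    return next_state, reward, next_state == GOAL


def always_right(state: int) -> int:
    """A fixed policy pi: maps the current state to a motor command (+1 = right)."""
    return +1


def random_policy(state: int) -> int:
    """A trial-and-error policy that picks left (-1) or right (+1) at random."""
    return random.choice([-1, +1])


def run_episode(pi, start: int = 0, horizon: int = 20) -> float:
    """Interact with the environment and return the sum of rewards (the return)."""
    state, total = start, 0.0
    for _ in range(horizon):
        state, reward, done = step(state, pi(state))
        total += reward
        if done:
            break
    return total


print(run_episode(always_right), run_episode(random_policy))
```

On this toy task no policy can collect more than `run_episode(always_right)` (three step penalties plus the goal reward); an RL algorithm would have to discover such a policy from the rewards alone.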

RL in ML

Supervised Learning & Imitation Learning?

- Supervised learning: learns from a sequence of independent examples.

- Imitation learning: learns how to act in GIVEN (demonstrated) situations.

What is a reduction algorithm?

An algorithm that converts a proven solution for one class of problems into a solution for another class; such reductions are a key technique in ML.

A CRUCIAL fact in learning applications?

Existing learning techniques reduce problems to simpler classification problems; this is an area of active research.

What information is provided in RL?

Only information about the chosen action, not what-might-have-been.

Supervised learning’s problem?

Any mistakes modify the future observations.

The same and different compared with imitation learning?

- Same: both are interactive, sequential prediction problems.

- Different: RL has complex reward structures (with only bandit-style feedback on the actions actually chosen), which makes it theoretically and computationally hard.

The same between RL and classical optimal control?

- Both aim to find an optimal policy, also called the controller or control policy.

- Both rely on the notion of a model that describes transitions between states.

The difference between RL and classical optimal control?

- Classical optimal control: often breaks down because of model and computational approximations.

- RL: operates on measured data and rewards from interaction with the environment, using approximations and data-driven techniques.

RL in Robotics

Two interesting points about robot learning systems?

- They often use policy search methods.

- Many are model-based, although not every system adopts a model.

Techniques

Brief summary

Basic work process?

The agent tries to maximize the accumulated reward. In an episodic task, the task is restarted after the end of each episode. If the task does NOT have a clear beginning and end, one optimizes the reward over the whole lifetime or a discounted return.

What is the reward R based on?

It is a function of the state and observation.

What is the GOAL of RL?

To find a mapping from states to actions (the policy π).

Two forms of policy π? Details?

Deterministic or probabilistic. A deterministic policy always uses the exact same action for a given state; a probabilistic (stochastic) policy draws a sample from a distribution over actions.

Why does RL need exploration? Where can it be found?

Because the relations between states, actions, and rewards are not known in advance and have to be discovered. Exploration can be:

- directly embedded in the policy, or

- separate, and only part of the learning process.
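To make the two forms concrete, here is a sketch with hypothetical action preferences for a single state. A greedy argmax is deterministic. Sampling from a softmax distribution (one common choice, assumed here) is probabilistic and embeds exploration in the policy itself. ε-greedy is one common way, again an assumption rather than something the notes prescribe, to add exploration separately, only while learning.

```python
import math
import random

# Hypothetical action preferences in one state (illustrative numbers only).
PREFS = {"left": 0.2, "stay": 0.0, "right": 1.0}


def deterministic_policy(prefs: dict) -> str:
    """Deterministic: always returns the exact same (best) action for this state."""
    return max(prefs, key=prefs.get)


def softmax_probs(prefs: dict, temperature: float = 1.0) -> dict:
    """Turn preferences into a probability distribution over actions."""
    m = max(prefs.values())  # subtract the max for numerical stability
    exps = {a: math.exp((p - m) / temperature) for a, p in prefs.items()}
    z = sum(exps.values())
    return {a: e / z for a, e in exps.items()}


def stochastic_policy(prefs: dict, temperature: float = 1.0, rng=random) -> str:
    """Probabilistic: samples an action, so exploration is embedded in the policy."""
    probs = softmax_probs(prefs, temperature)
    actions = list(probs)
    return rng.choices(actions, weights=[probs[a] for a in actions], k=1)[0]


def epsilon_greedy(prefs: dict, epsilon: float = 0.1, rng=random) -> str:
    """Exploration kept separate: random actions are mixed in only while learning."""
    if rng.random() < epsilon:
        return rng.choice(list(prefs))
    return deterministic_policy(prefs)


print(deterministic_policy(PREFS), stochastic_policy(PREFS), epsilon_greedy(PREFS))
```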

Basic framework in RL?

Markov Decision Process (MDP).

S: State;

A: Action;

R: Reward;

T: Transition probabilities or densities in the continuous state case

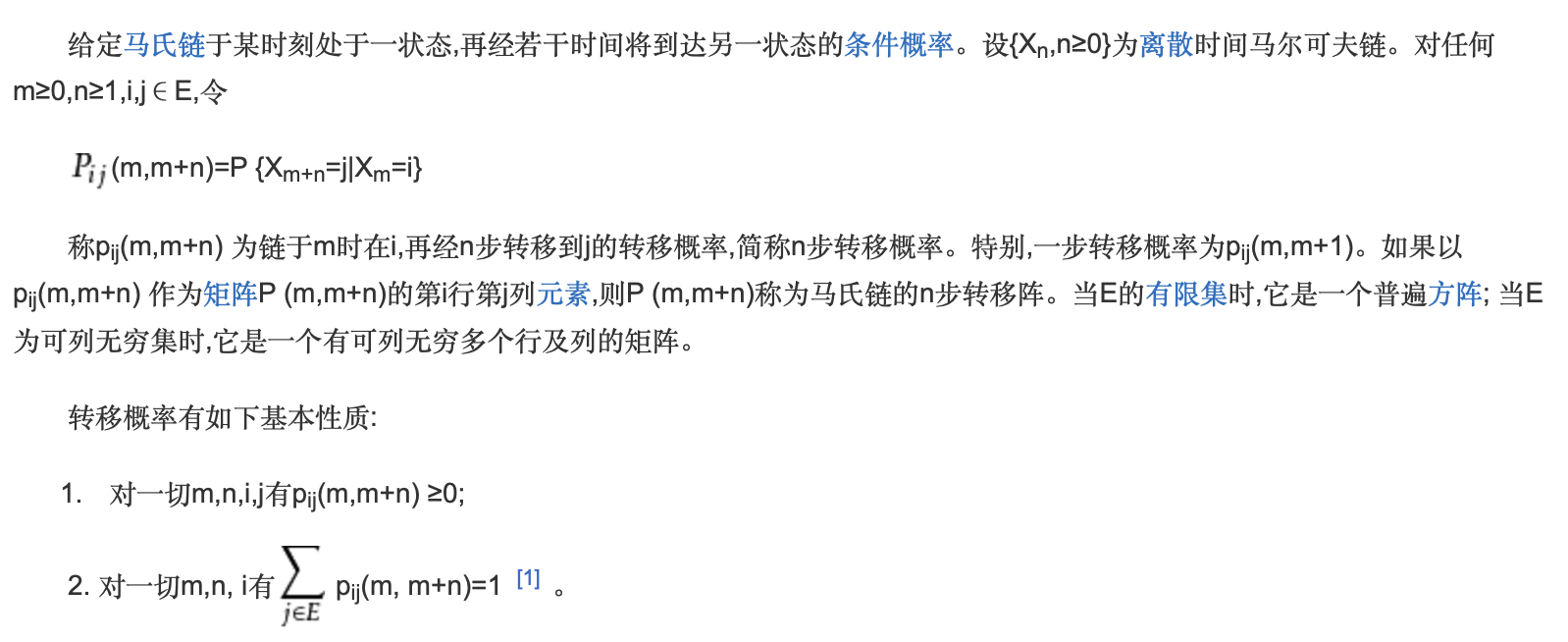

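Transition probability

The transition probability is an important concept in Markov chains. Suppose a Markov chain consists of m states, so that historical data are converted into a sequence made up of these m states. Starting from any state, after any single transition, one of the states 1, 2, ..., m must occur; the probability of such a transition between states is called the transition probability.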

The meaning of T(s′, a, s) = P(s′|s, a)?

The next state s′ and the reward depend only on the previous state s and action a (Markov property).

Two ways the reward function can depend on the state?

- only on the current state and action: R = R(s, a)

- on the transitions: R = R(s′, a, s)
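The tuple (S, A, R, T) can be stored as plain tables. The sketch below uses a hypothetical two-state, two-action MDP (not from the survey) and shows both reward variants. The next state is sampled using only the current state s and action a, which is the Markov property described above.

```python
import random

# Hypothetical two-state, two-action MDP (illustrative numbers only).
S = ["low", "high"]
A = ["wait", "work"]

# T[(s, a)] maps each next state s_next to T(s_next, a, s) = P(s_next | s, a).
T = {
    ("low", "wait"): {"low": 1.0},
    ("low", "work"): {"low": 0.3, "high": 0.7},
    ("high", "wait"): {"high": 0.8, "low": 0.2},
    ("high", "work"): {"high": 0.9, "low": 0.1},
}


def reward_state_action(s: str, a: str) -> float:
    """Reward that depends only on the current state and action: R(s, a)."""
    return (1.0 if s == "high" else 0.0) - (0.1 if a == "work" else 0.0)


def reward_transition(s_next: str, a: str, s: str) -> float:
    """Reward that depends on the whole transition: R(s', a, s)."""
    return 1.0 if (s == "low" and s_next == "high") else 0.0


def sample_next_state(s: str, a: str, rng=random) -> str:
    """Markov property: the next state is drawn using only (s, a), not the history."""
    dist = T[(s, a)]
    states = list(dist)
    return rng.choices(states, weights=[dist[x] for x in states], k=1)[0]


# Usage: roll out a few steps of the "always work" behavior.
s = "low"
for _ in range(5):
    s_next = sample_next_state(s, "work")
    print(s, "->", s_next, reward_state_action(s, "work"), reward_transition(s_next, "work", s))
    s = s_next
```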

Goals of RL

to discover an optimal policy π∗ that maps states (or observations) to actions so as to maximize the expected return J.

finite-horizon model

Maximize the expected reward over the next H steps.
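Written as a formula, with R_h the reward received at time step h and H the horizon (notation assumed here), the finite-horizon objective is:

```latex
J = E\left\{ \sum_{h=0}^{H} R_h \right\}
```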

Discount factor γ

- affects how much the future is taken into account

- needs to be tuned manually

Problems that a (too low) discount factor γ can cause:

- myopic behavior

- greedy behavior

- poor performance and unstable optimal control laws

Conclusion:

discounted formulations are often inadmissible in robot control.
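The discounted objective weights the reward at step h by γ^h, i.e. J = E{Σ_h γ^h R_h}. The sketch below evaluates this sum for single, hypothetical reward sequences (no expectation is taken) to show the myopia of a low γ: with γ = 0.3 the small immediate reward wins, while with γ = 0.99 the delayed larger reward wins.

```python
def discounted_return(rewards: list[float], gamma: float) -> float:
    """Sum of gamma**h * r_h over one sampled reward sequence r_0, r_1, ..."""
    return sum((gamma ** h) * r for h, r in enumerate(rewards))


# Hypothetical behaviors: take a small reward now, or pay a cost now
# and collect a larger reward four steps later.
greedy = [1.0, 0.0, 0.0, 0.0, 0.0]
patient = [-1.0, 0.0, 0.0, 0.0, 5.0]

for gamma in (0.3, 0.99):
    print(f"gamma={gamma}: greedy={discounted_return(greedy, gamma):.4f}, "
          f"patient={discounted_return(patient, gamma):.4f}")
```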

Average reward criterion

The discount factor γ is replaced by 1/H, with H → ∞.

Problems:

- cannot distinguish between policies that initially gain high rewards and those that initially gain low rewards
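As a formula, with R_h the reward at time step h (notation assumed here), the average reward criterion reads:

```latex
J = \lim_{H \to \infty} E\left\{ \frac{1}{H} \sum_{h=0}^{H} R_h \right\}
```

For bounded rewards, any finite prefix contributes a term that vanishes as H → ∞, which is why this criterion cannot tell a good transient from a bad one.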

Bias optimal (Lewis and Puterman)

- Definition: a policy with an optimal prefix and optimal long-term behavior.

- The prefix is also called the transient phase.

A characteristic in RL:

different policies can achieve the same long-term reward but differ in their transient phase.
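A small numeric illustration, with two hypothetical reward sequences that share the same steady reward of 1.0 per step but start with different transients. The empirical average over H steps differs by 21/H, so the gap disappears as H grows, whereas a plain finite-horizon sum would keep the difference of 21.

```python
def average_reward(rewards: list[float]) -> float:
    """Empirical (1/H) * sum_h r_h over a finite horizon H = len(rewards)."""
    return sum(rewards) / len(rewards)


def reward_sequence(transient: list[float], steady: float, horizon: int) -> list[float]:
    """Hypothetical rewards: a transient prefix followed by a constant steady value."""
    return transient + [steady] * (horizon - len(transient))


good_start = [2.0, 2.0, 2.0]     # hypothetical policy 1: good transient
bad_start = [-5.0, -5.0, -5.0]   # hypothetical policy 2: bad transient

for H in (10, 1_000, 100_000):
    a = average_reward(reward_sequence(good_start, 1.0, H))
    b = average_reward(reward_sequence(bad_start, 1.0, H))
    print(f"H={H}: policy 1 = {a:.5f}, policy 2 = {b:.5f}, gap = {a - b:.5f}")
```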

Important shortcoming & most relevant model

Important shortcoming:

- The shortcomings of the discounted formulation are often more critical than those of the average reward setting.

- Reason: stable behavior is often more important than a good transient.

Most relevant model:

- An episodic control task runs for H time-steps, is reset, and is started over.

- H may be arbitrarily large, as long as the expected reward over the episode is guaranteed to converge.

- Such episodic tasks are the most frequent, so finite-horizon models are often the most relevant.

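Two basic goals:

- find an optimal strategy (at the end of a phase of training or interaction)

- maximize the reward over the whole time the robot interacts with the world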

“Exploration-exploitation trade-off”: whether

- to play it safe and stick to well-known actions with high rewards, or

- to dare trying new things in order to discover new strategies with an even higher reward.
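The trade-off can be illustrated with ε-greedy action selection on a multi-armed bandit, a standard toy problem assumed here rather than taken from the survey. With probability ε the agent dares to try a random arm; otherwise it plays it safe with the arm that currently looks best. Only the reward of the chosen arm is observed.

```python
import random


def run_bandit(true_means, epsilon, steps, seed=0):
    """Epsilon-greedy on a hypothetical bandit with Gaussian rewards (std 1).

    Only the reward of the chosen arm is observed (bandit-style feedback).
    Returns the estimated mean reward of every arm and the total reward collected.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms
    counts = [0] * n_arms
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: dare to try a random arm
        else:
            arm = max(range(n_arms), key=lambda i: estimates[i])  # exploit
        reward = rng.gauss(true_means[arm], 1.0)
        counts[arm] += 1
        # Incremental sample average, updated for the chosen arm only.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return estimates, total


estimates, total = run_bandit([0.0, 0.5, 1.0], epsilon=0.1, steps=5000)
print([round(e, 2) for e in estimates], round(total, 1))
```

Setting `epsilon=0` gives a purely greedy agent that may lock onto whichever arm first looked good, which is the "play it safe" extreme of the trade-off.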

“Curse of dimensionality”:

in continuous spaces, the number of discretized states grows exponentially with the number of state variables.
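A quick count shows the exponential growth, assuming (hypothetically) a uniform grid with 10 bins per state variable. Fourteen variables would correspond, for example, to the joint positions and velocities of a 7-degree-of-freedom arm.

```python
def num_grid_cells(bins_per_variable: int, n_variables: int) -> int:
    """Number of discrete states when every state variable is split into equal bins."""
    return bins_per_variable ** n_variables


for n in (1, 2, 7, 14):
    print(f"{n:2d} state variables -> {num_grid_cells(10, n):,} discrete states")
```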

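Off-policy & on-policy:

Off-policy:

learns independently of the employed policy, so an explorative strategy that differs from the desired final policy can be used during learning.

On-policy:

collects sample information about the environment using the current policy.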
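The classic pair of temporal-difference updates makes the distinction concrete (standard textbook algorithms, used here as an illustration rather than taken from the notes). Q-learning is off-policy because its target uses the greedy action in the next state, whatever the exploring behavior actually does; SARSA is on-policy because its target uses the action the current policy really took. The Q-values, states, and actions below are hypothetical.

```python
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9):
    """Off-policy TD update: the target uses the greedy action in s_next,
    regardless of which (possibly exploratory) action is taken next."""
    target = r + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (target - Q[(s, a)])


def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9):
    """On-policy TD update: the target uses the action a_next that the current
    policy actually chose in s_next."""
    target = r + gamma * Q[(s_next, a_next)]
    Q[(s, a)] += alpha * (target - Q[(s, a)])


# Hypothetical situation: in s1 the greedy action is "safe" (Q = 1.0), but the
# exploring behavior policy happened to pick "risky" (Q = -2.0).
actions = ["safe", "risky"]
Q_off, Q_on = defaultdict(float), defaultdict(float)
for Q in (Q_off, Q_on):
    Q[("s1", "safe")], Q[("s1", "risky")] = 1.0, -2.0

q_learning_update(Q_off, "s0", "go", 0.0, "s1", actions)
sarsa_update(Q_on, "s0", "go", 0.0, "s1", "risky")
print(Q_off[("s0", "go")], Q_on[("s0", "go")])
```

With α = 0.1 and γ = 0.9, the off-policy estimate moves towards 0.9 (about 0.09 after one update) while the on-policy estimate moves towards -1.8 (about -0.18), because SARSA accounts for the exploration the policy actually performs.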

An important implication of probability distributions over actions:

stochastic policies can be optimal stationary policies for selected problems (and may even break the curse of dimensionality).

RL in the Average Reward Setting (ARS)

Two reasons why the ARS is more suitable:

- no discount factor has to be chosen

- time does not have to be considered explicitly

Features of the policy π:

stationary & memoryless

RL’s aim

maximize 𝜋 & 𝜃
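For a stationary, memoryless policy π, the average reward can be computed as J(π) = Σ_s μ^π(s) Σ_a π(a|s) R(s, a), where μ^π is the stationary state distribution induced by π. The sketch below evaluates this for two deterministic policies on a hypothetical two-state, two-action MDP, using power iteration to find μ^π; comparing J across policies shows what maximizing over the policy means in this setting.

```python
# Hypothetical 2-state, 2-action MDP: T[s][a][s2] = P(s2 | s, a), R[s][a].
T = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.7, 0.3], [0.1, 0.9]],
]
R = [[0.0, -0.5], [1.0, 0.5]]


def state_chain(pi):
    """P_pi[s][s2] = sum_a pi(a|s) * P(s2 | s, a) for a stationary, memoryless pi."""
    n = len(T)
    return [[sum(pi[s][a] * T[s][a][s2] for a in range(len(pi[s])))
             for s2 in range(n)] for s in range(n)]


def stationary_distribution(P, iters=1000):
    """mu(s2) = sum_s mu(s) * P[s][s2], found by power iteration from a uniform start."""
    n = len(P)
    mu = [1.0 / n] * n
    for _ in range(iters):
        mu = [sum(mu[s] * P[s][s2] for s in range(n)) for s2 in range(n)]
    return mu


def average_reward(pi):
    """J(pi) = sum_s mu_pi(s) * sum_a pi(a|s) * R(s, a)."""
    mu = stationary_distribution(state_chain(pi))
    return sum(mu[s] * pi[s][a] * R[s][a]
               for s in range(len(R)) for a in range(len(R[s])))


# pi[s][a] = probability of choosing action a in state s
always_a0 = [[1.0, 0.0], [1.0, 0.0]]
always_a1 = [[0.0, 1.0], [0.0, 1.0]]
print(average_reward(always_a0), average_reward(always_a1))
```

For these numbers the stationary distributions are (7/8, 1/8) and (1/9, 8/9), giving J = 0.125 and J = 3.5/9 ≈ 0.389, so always choosing action 1 is better in the long run.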

Definitions of policy search & value-function-based approaches

Policy search:

optimizing in the **primal** formulation.

Value-function-based approaches:

searching in the **dual** formulation.
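A minimal sketch of the primal view: policy search adjusts the policy parameters directly to increase the return, without computing a value function. Everything here is a hypothetical toy setup: a 1-D system x_{t+1} = x_t + u_t, a linear policy u = -θx, a quadratic cost, and random-perturbation hill climbing as the search method. Practical policy search methods (e.g., policy gradients) are more sophisticated.

```python
import random


def episode_return(theta: float, horizon: int = 20, x0: float = 1.0) -> float:
    """Return of the hypothetical linear policy u = -theta * x on x_{t+1} = x_t + u_t,
    with reward -(x^2 + 0.1 * u^2) per step."""
    x, total = x0, 0.0
    for _ in range(horizon):
        u = -theta * x
        total += -(x * x + 0.1 * u * u)
        x = x + u
    return total


def hill_climb(theta: float = 0.0, step: float = 0.1, iters: int = 200, seed: int = 0):
    """Policy search in parameter space: perturb theta and keep improvements only."""
    rng = random.Random(seed)
    best = episode_return(theta)
    for _ in range(iters):
        candidate = theta + rng.gauss(0.0, step)
        value = episode_return(candidate)
        if value > best:
            theta, best = candidate, value
    return theta, best


theta, best = hill_climb()
print(f"theta={theta:.3f}, return={best:.3f} (start: {episode_return(0.0):.3f})")
```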

Value Function Approaches

Karush-Kuhn-Tucker (KKT) conditions:

- There are as many equations as the number of states multiplied by the number of actions.

Bellman Principle of Optimality:

Definition:

Whatever the initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision.

Method:

Perform an optimal action a*, then follow the optimal policy π* to achieve the global optimum.

Conclusion:

The optimal value function V*(s) corresponds to the long-term additional reward gained by starting in state s while taking optimal actions a* (following π*).
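In the discounted setting, the principle yields the Bellman optimality equation. It is written here with the notes' transition notation T(s′, a, s) = P(s′|s, a) and discount factor γ; the state-action value Q* and the greedy policy are included as well:

```latex
Q^*(s, a) = R(s, a) + \gamma \sum_{s'} T(s', a, s)\, V^*(s'), \qquad
V^*(s) = \max_{a} Q^*(s, a), \qquad
\pi^*(s) = \arg\max_{a} Q^*(s, a)
```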

Traditional RL approaches:

identifying solutions to this equation (the value function).

Steps:

- approximate the Lagrangian multipliers V* (the value function)

- reconstruct the optimal policy from V*

ALERT:

The optimal action a* is determined by the policy π.

Qπ can be used instead of Vπ; it differs because it explicitly shows the effect of a particular action.

Conclusion:

Choosing a* in every state reconstructs an optimal, deterministic policy π* that achieves the highest V*. If V* and T are known and the actions are discrete, the optimal policy follows from an exhaustive search over actions.

!!!: In continuous spaces, finding the optimal action is itself an optimization problem. If states and actions are discrete, the value function and policy can be stored as tables, and picking an action is a look-up. If the space is too big, its dimensionality must be reduced (if possible).

- Using Q avoids the need for the transition function T.
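The difference can be sketched on a hypothetical two-state, two-action MDP: computing Q from V needs the transition model T, but once Q is available the greedy deterministic policy is an argmax look-up per state. The value vectors passed in below are arbitrary test inputs, not optimal values.

```python
# Hypothetical 2-state, 2-action MDP: T[s][a][s2] = P(s2 | s, a), R[s][a].
T = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.7, 0.3], [0.1, 0.9]],
]
R = [[0.0, -0.5], [1.0, 0.5]]
GAMMA = 0.9


def q_from_v(V):
    """Q(s, a) = R(s, a) + gamma * sum_s2 P(s2 | s, a) * V(s2): requires the model T."""
    return [[R[s][a] + GAMMA * sum(T[s][a][s2] * V[s2] for s2 in range(len(V)))
             for a in range(len(R[s]))]
            for s in range(len(R))]


def greedy_from_q(Q):
    """pi(s) = argmax_a Q(s, a): a per-state look-up that needs no transition model."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in Q]


print(greedy_from_q(q_from_v([0.0, 0.0])))   # with V = 0, Q equals R
print(greedy_from_q(q_from_v([0.0, 10.0])))  # state 1 made valuable
```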

Dynamic Programming-Based Methods (model-based)

- T and R are needed to compute the value function

- The model (T, R) does NOT need to be predetermined; it can be learned from data, potentially incrementally

TYPICAL METHODS:

Policy iteration

Two phases:

policy evaluation:

determines the value function for the current policy

policy improvement:

greedily selects the best action in every state
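A tabular sketch of the two phases on a hypothetical two-state, two-action MDP with discount factor γ = 0.9 (all numbers illustrative). Policy evaluation is done here by iterating the Bellman equation for the fixed policy until it converges; solving the linear system directly would also work.

```python
# Hypothetical 2-state, 2-action MDP: T[s][a][s2] = P(s2 | s, a), R[s][a].
T = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.7, 0.3], [0.1, 0.9]],
]
R = [[0.0, -0.5], [1.0, 0.5]]
GAMMA = 0.9
N_S, N_A = 2, 2


def backup(V, s, a):
    """One-step lookahead: R(s, a) + gamma * sum_s2 P(s2 | s, a) * V(s2)."""
    return R[s][a] + GAMMA * sum(T[s][a][s2] * V[s2] for s2 in range(N_S))


def evaluate(pi, tol=1e-10):
    """Policy evaluation: repeat V(s) <- backup(V, s, pi(s)) until V stops changing."""
    V = [0.0] * N_S
    while True:
        new_V = [backup(V, s, pi[s]) for s in range(N_S)]
        if max(abs(x - y) for x, y in zip(new_V, V)) < tol:
            return new_V
        V = new_V


def improve(V):
    """Policy improvement: greedily select the best action in every state."""
    return [max(range(N_A), key=lambda a: backup(V, s, a)) for s in range(N_S)]


def policy_iteration(pi=None):
    """Alternate evaluation and improvement until the policy no longer changes."""
    pi = list(pi) if pi is not None else [0] * N_S
    while True:
        V = evaluate(pi)
        new_pi = improve(V)
        if new_pi == pi:
            return pi, V
        pi = new_pi


pi_star, V_star = policy_iteration()
print(pi_star, V_star)
```

On this toy MDP, starting from the policy [0, 0], one improvement step already switches both states to action 1, and the next round leaves the policy unchanged, so the loop stops.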

Value iteration

- combines policy evaluation and policy improvement
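The same hypothetical MDP solved with value iteration: each sweep applies the max over actions directly, so evaluation and improvement are merged, and the greedy policy is read off once the values have converged.

```python
# Hypothetical 2-state, 2-action MDP: T[s][a][s2] = P(s2 | s, a), R[s][a].
T = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.7, 0.3], [0.1, 0.9]],
]
R = [[0.0, -0.5], [1.0, 0.5]]
GAMMA = 0.9
N_S, N_A = 2, 2


def backup(V, s, a):
    """One-step lookahead: R(s, a) + gamma * sum_s2 P(s2 | s, a) * V(s2)."""
    return R[s][a] + GAMMA * sum(T[s][a][s2] * V[s2] for s2 in range(N_S))


def value_iteration(tol=1e-10):
    """Repeat V(s) <- max_a backup(V, s, a) until convergence, then act greedily."""
    V = [0.0] * N_S
    while True:
        # Evaluation and improvement in one step: back up with the best action.
        new_V = [max(backup(V, s, a) for a in range(N_A)) for s in range(N_S)]
        if max(abs(x - y) for x, y in zip(new_V, V)) < tol:
            break
        V = new_V
    pi = [max(range(N_A), key=lambda a: backup(new_V, s, a)) for s in range(N_S)]
    return pi, new_V


pi_star, V_star = value_iteration()
print(pi_star, V_star)
```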

Monte Carlo Methods

Temporal Difference Methods

Challenges

- Curse of Dimensionality

- Curse of Real-World Samples

- Curse of Under-Modeling and Model Uncertainty

- Curse of Goal Specification

Approaches

Tractability Through Representation

- Smart State-Action Discretization

- Value Function Approximation

- Pre-structured Policies

Tractability Through Prior Knowledge

- Prior Knowledge Through Demonstration

- Prior Knowledge Through Task Structuring

Tractability Through Models

- Core Issues and General Techniques in Mental Rehearsal

- Successful Learning Approaches with Forward Model